Autonomous Justification for Enabling Explainable Decision Support in Human-Robot Teaming

Matthew Luebbers*, Aaquib Tabrez*, Kyler Ruvane*, and Bradley Hayes

Abstract

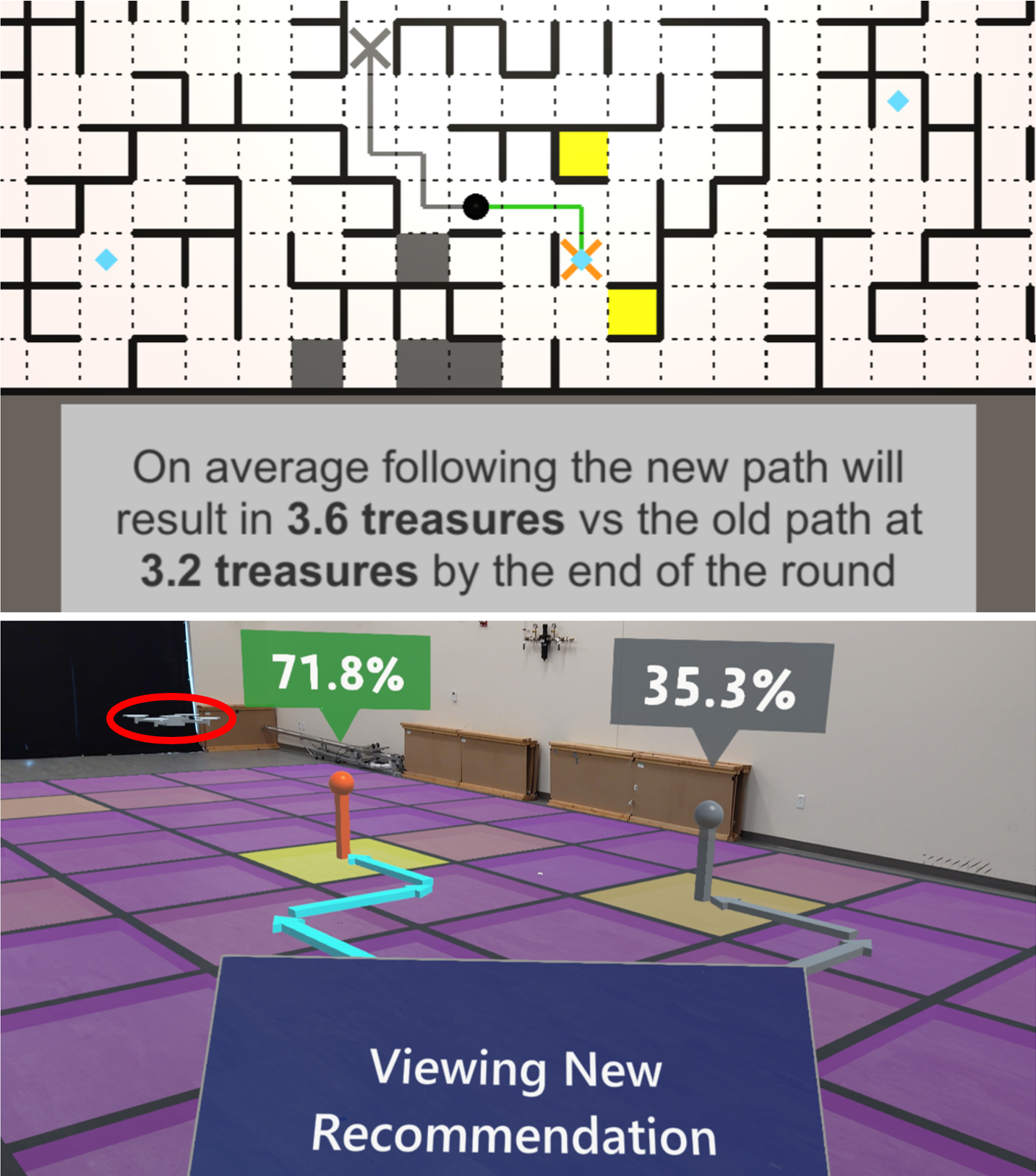

Justification is an important facet of policy explanation, a process for describing the behavior of an autonomous system. In human-robot collaboration, an autonomous agent can attempt to justify distinctly important decisions by offering explanations as to why those decisions are right or reasonable, leveraging a snapshot of its internal reasoning to do so. Without sufficient insight into a robot's decision-making process, it becomes challenging for users to trust or comply with those important decisions, especially when they are viewed as confusing or contrary to the user's expectations (e.g., when decisions change as new information is introduced to the agent's decision-making process). In this work, we characterize the benefits of justification within the context of decision support during human-robot teaming (i.e., agents giving recommendations to human teammates). We introduce a formal framework using value of information theory to strategically time justifications during periods of misaligned expectations for greater effect. We also characterize four different types of counterfactual justification derived from established explainable AI literature and evaluate them against each other in a human-subjects study involving a collaborative, partially observable search task. Based on our findings, we present takeaways on the effective use of different types of justifications in human-robot teaming scenarios to improve user compliance and decision-making by strategically influencing human teammate thinking patterns. Finally, we present an augmented reality system incorporating these findings into a real-world decision-support system for human-robot teaming.

The paper can be accessed here, and from our Publications tab.